eCommerce A/B Testing: A Complete Guide for Merchants

Every day, thousands of eCommerce store owners ask the same question: “Why aren’t more visitors converting into customers?”

A quick first check is whether you have already ticked the boxes below:

- high-quality products

- attractive product photography

- compelling product descriptions

- optimized site speed

… and more.

If all of these are already taken care of, what remains is conversion rate optimization (CRO). And to fine-tune your website for better conversion rates, what you mainly need is eCommerce A/B testing.

You may already know the term in theory, but not how to apply it in practice.

So, this comprehensive guide will help you identify high-impact testing opportunities and interpret results correctly to drive meaningful improvements in your conversion rates.

Let’s get started.

What is A/B Testing in eCommerce?

A/B testing (also known as split testing) is a methodical approach to comparing two versions of a webpage or app element to determine which performs better for your business goals.

Think of it as trying on different outfits before deciding which looks best for an important event, except here the “best look” is measured in conversions, revenue, and customer satisfaction.

Unlike general A/B testing, eCommerce website testing focuses specifically on optimizing the purchase journey.

How does A/B Testing work?

A/B testing works by:

- Creating two versions of your content: Version A (the current version) and Version B (the variant with a modified element)

- Randomly dividing your site visitors between these versions

- Measuring how users interact with each version

- Analyzing the data to determine which version achieves your goals more effectively

For example, an online shoe retailer might test whether displaying customer reviews directly on product cards increases the add-to-cart rate compared to showing reviews only on the product detail page.

In a nutshell, website A/B Testing is a data-driven approach that removes guesswork from your design and functionality decisions and helps you to optimize your store based on actual customer behavior.

That’s why you should always prioritize this method rather than assumptions or industry “best practices” that might not apply to your specific audience.

In the next section, we’ll explore why implementing A/B testing is essential for growing your eCommerce business and staying competitive in an increasingly crowded industry.

Why eCommerce A/B Testing Matters

Analytics alone tell you what is happening on your site; A/B tests show which changes actually cause visitors to behave differently.

A/B testing can transform your gut feelings into concrete evidence and give you a reliable compass for your eCommerce decisions. So, when optimizing your eCommerce site, A/B Testing is something you should never ignore.

Here we’ll discuss more reasons why you should consider it for better eCommerce CRO.

1# Reduces financial risk

Instead of implementing changes across your entire site based on assumptions, you test with a segment of your traffic first. This approach lets you validate ideas before full commitment, protecting you from costly mistakes.

2# Solves real customer problems

What works for one store might not work for another. A/B testing shows you exactly what your customers respond to, rather than relying on generic advice.

You may love that green “Add to Cart” button, but your customers might prefer blue.

By testing alternatives, you discover what actually resonates with your audience. It validates customer feedback from on-site surveys and helps you adapt to seasonal changes and evolving shopping habits.

3# Creates a culture of continuous improvement

The most successful eCommerce businesses don’t rest on past successes—they’re constantly optimizing. Amazon runs thousands of tests annually, refining even the smallest elements of their shopping experience.

4# Increases ROI on existing traffic

Driving traffic to your site is expensive. A/B testing for websites helps maximize revenue from visitors you already have, often proving more cost-effective than acquiring new traffic.

Most eCommerce sites convert at just 2-3% on average. This means roughly 97-98% of your visitors leave without purchasing anything. Even small improvements in this rate can dramatically impact your bottom line.

A shift from 2% to 2.5% conversion isn’t just a 0.5-percentage-point increase; it’s 25% more orders from the same traffic, and about 25% more revenue if average order value holds steady.
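To make that arithmetic concrete, here is a small sketch that separates the absolute change (in percentage points) from the relative lift. The traffic figure and the function names are made up for illustration.

```python
def relative_lift(baseline_rate: float, new_rate: float) -> float:
    """Relative change in conversion rate, e.g. 0.25 for a 25% lift."""
    return (new_rate - baseline_rate) / baseline_rate


def orders(visitors: int, conversion_rate: float) -> float:
    """Expected number of orders for a given traffic level."""
    return visitors * conversion_rate


visitors = 10_000  # hypothetical monthly traffic
baseline, improved = 0.02, 0.025

point_change = (improved - baseline) * 100  # percentage points
lift = relative_lift(baseline, improved)

print(f"Absolute change: {point_change:.1f} percentage points")
print(f"Relative lift: {lift:.0%}")
print(f"Orders: {orders(visitors, baseline):.0f} -> {orders(visitors, improved):.0f}")
```

The relative lift is the number that maps to revenue, which is why a change that looks tiny in percentage points can still matter.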

5# Reveals unexpected insights

Sometimes tests deliver surprising results that challenge industry “best practices.” What works for others might not work for your unique audience. A/B testing helps you discover your specific conversion formula.

The beauty of A/B testing lies in its simplicity: small changes, measured precisely, can yield significant results. A different headline, a repositioned call-to-action, or a streamlined checkout process might be all that stands between your current performance and substantial growth.

Next, let’s look at what exactly you can test.

Which Elements to Test in A/B Testing?

Almost any element on your website can be tested. But with limited time and resources, you need to focus on elements that truly move the needle.

Desktop-Specific Elements to Test in eCommerce

Here are the key areas worth testing for desktop users:

| Element | What to Test | How to Test | Impact |
| --- | --- | --- | --- |
| Call-to-Action Buttons | • Button text (“Add to Cart” vs “Buy Now”) • Color and contrast • Size and shape • Placement on page | Test one variable at a time; measure both click rates AND completion rates | 5-15% conversion lift; higher on mobile |
| Product Images | • Product-only vs lifestyle imagery • Number of images shown • Image size and quality • Zoom and 360° features | A/B test on high-traffic products first; use heatmaps to see which images customers focus on; fashion/apparel sees higher impact from image optimization | 10-30% conversion improvement for optimized imagery |
| Checkout Flow | • Number of steps/pages • Form field requirements • Guest checkout options • Progress indicators | Record user sessions to identify abandonment points; tests typically need 2-3 weeks for reliable data; tool recommendation: Optimizely or VWO | 20-60% reduction in cart abandonment possible |
| Price Presentation | • Discount visualization • Showing/hiding original prices • Payment option visibility • Shipping cost presentation | Test with different customer segments, since price sensitivity varies by audience; luxury brands require different approaches than value retailers | 5-25% conversion increase; higher for discount-sensitive categories |
| Product Descriptions | • Length and format • Technical specs vs benefit-focused • Bullet points vs paragraphs • Tone and vocabulary | Analyze customer service questions to identify information gaps; technical products benefit from more detailed specs than fashion items | 5-15% conversion improvement; higher for complex products |
| Social Proof | • Review display formats • Testimonial placement • Trust badges and certifications • User statistics (customers served, etc.) | Test both placement AND format; new brands see higher impact than established ones; tool recommendation: TrustPilot integration or Yotpo | 15-40% increase in conversion for new visitors |
| Navigation | • Menu structure and labeling • Filter and sort options • Mobile navigation patterns • Search bar prominence | Combine A/B tests with user testing; navigation tests typically need larger sample sizes (1-2 weeks minimum) | 5-25% increase in pages per session; 10-20% conversion lift |
| Upsell/Cross-sell | • Recommendation algorithms • Presentation timing • Visual display format • Number of items shown | Measure both click-through AND conversion rates; fashion and accessories see higher cross-sell success than electronics | 5-15% increase in AOV; up to 30% in related categories |
| Page Speed Elements | • Image optimization • Lazy loading implementation • Mobile performance • Critical rendering path | Test speed optimizations on highest-traffic pages first; tool recommendation: Google PageSpeed Insights with real user monitoring | 1-5% conversion improvement per second of load time reduction |
| Email Capture | • Popup timing and triggers • Incentive offers • Form fields required • Design and messaging | Test both conversion rate AND email quality; exit-intent popups typically outperform immediate ones; test duration: 1 week minimum | 3-10% increase in email captures; ROI extends beyond immediate sales |
| Search Functionality | • Autocomplete features • Results layout • Zero-results handling • Filter options in results | Analyze search logs to identify common queries; tool recommendation: Algolia or Elasticsearch; test requires larger traffic (2+ weeks) | 15-40% higher conversion from search users after optimization |

As you can see, the table above outlines key elements to test on your eCommerce site, organized by importance and potential impact.

The most valuable A/B tests address specific customer pain points or business objectives rather than random changes.

That covers desktop users. Next, let’s look at mobile users.

Mobile-Specific Elements to Test in eCommerce

Mobile shopping experiences require specialized optimization strategies because mobile conversion rates are typically lower than desktop rates.

Here’s what to test to optimize the mobile shopping experience:

| Mobile Element | What to Test | How to Test | Impact |
| --- | --- | --- | --- |
| Touch Targets | • Button size (minimum 44×44px) • Spacing between clickable elements • Hit area expansion beyond visual bounds | Test with multiple devices and screen sizes; focus on thumb-reach areas on the screen | 5-15% reduction in cart abandonment |
| Mobile Navigation | • Hamburger menu vs. tab bar • Bottom navigation vs. top • Persistent vs. collapsible categories • Search prominence | Prioritize top 3-5 destinations; combine A/B tests with usability testing; test duration: 1-2 weeks | 10-30% improvement in pages viewed and time on site |
| Page Layout | • Single column vs. grid layouts • Content prioritization • Scroll distance to key information • Sticky elements (headers, CTAs) | Use heatmaps to understand scroll depth and engagement; test on both Android and iOS | 5-20% increase in conversion rate |
| Form Input | • Form field size and spacing • Specialized keyboards for different inputs • Single fields vs. multi-field forms • Auto-fill implementation | Focus on reducing typing; enable proper input types (email, phone, etc.); essential for checkout optimization | 20-35% improvement in form completion rates |
| Product Images | • Image size and quality • Swipe gestures vs. dots/arrows • Image zoom functionality • Gallery vs. carousel presentation | Test different image gestures; ensure pinch-to-zoom works properly; consider vertical scroll galleries | 8-15% increase in conversion rate |
| Mobile CTAs | • Floating vs. inline buttons • Size and contrast • Text length and clarity • Position (top vs. bottom) | Test thumb-friendly zones (bottom corners accessible with one hand); sticky CTAs often outperform inline | 10-25% increase in click-through rates |
| Content Presentation | • Accordions vs. expanded content • “Read more” implementations • Tab systems vs. scrolling • Content chunking strategies | Balance between information density and scannability; test reveals higher impact in complex products | 5-15% improvement in engagement metrics |
| Loading Strategies | • Skeleton screens vs. spinners • Lazy loading implementation • Image quality vs. speed • Progressive loading sequence | Test on actual 3G/4G connections; tool recommendation: WebPageTest with throttling | 5-12% reduction in bounce rate |
| Mobile Search | • Search bar size and placement • Voice search options • Results formatting • Filter presentation in search results | Ensure autocomplete works well with touch; voice search shows increasing impact; typical test duration: 7-10 days | 15-30% improvement in search usage and conversion |
| Mobile Checkout | • One-page vs. multi-step • Form field sequence • Payment options prominence • Address verification methods | Prioritize mobile wallets and simplified payment; test with actual transactions; highest ROI test area | 15-40% reduction in checkout abandonment |

When planning your A/B tests, prioritize elements that directly impact your specific conversion goals. If your traffic is predominantly mobile, start your testing program here rather than on desktop.

Also, keep these mobile testing best practices in mind:

- Test on actual devices – Emulators and responsive testing don’t catch everything

- Consider network conditions – Test on both WiFi and cellular connections

- Focus on thumb zones – Optimize for one-handed usage patterns when possible

- Prioritize speed over features – Mobile users are more speed-sensitive than desktop users

- Test across platforms – iOS and Android users often show different behavior patterns

- Segment by device type – Phone vs. tablet behavior can differ significantly

Mobile optimization often delivers higher ROI than desktop testing due to typically lower baseline conversion rates and higher abandonment rates.

The most successful testing programs are methodical rather than random, focusing on elements that address known customer pain points or business objectives.

Now, let’s look at the best process for A/B testing.

How to Conduct Effective A/B Testing?

A/B testing might seem like a complex task, but it’s just a structured way to answer the big “what if” questions about your eCommerce store.

- What if the button was orange instead of blue?

- What if the checkout process had fewer steps?

- What if there was a guest checkout?

The good news is that A/B testing is easy to conduct with the right process.

Here’s how to get started with eCommerce A/B testing:

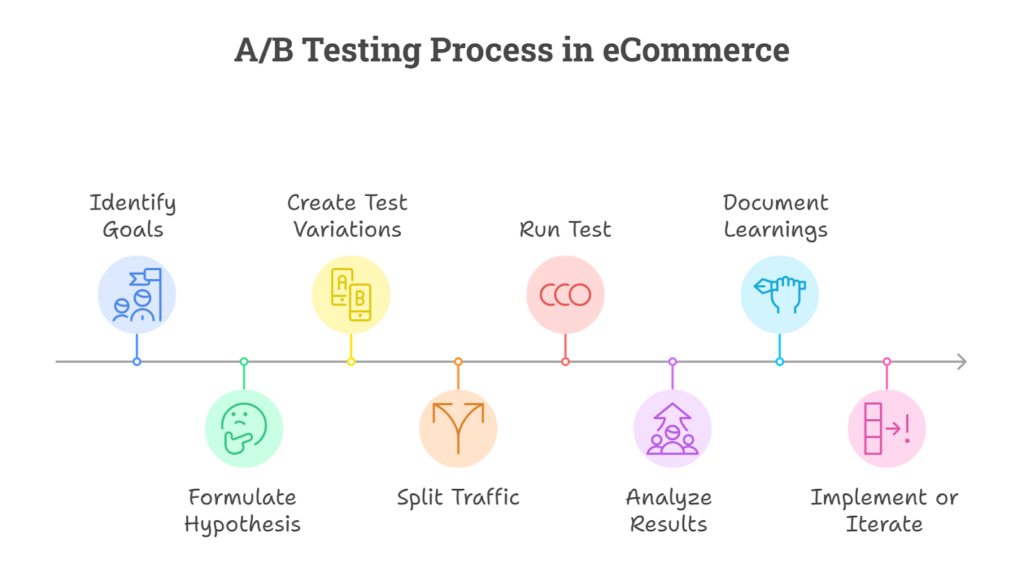

1. Start With Your Goals

Before you start changing things, ask yourself: What do I want to fix?

Here’s what it could be:

- Are you trying to increase add-to-cart rates?

- Do you want to improve checkout completion?

- Are you aiming to boost average order value (AOV)?

The trick is to keep your goals simple and measurable. Maybe you want to increase add-to-cart rates by 10%. Or maybe you want fewer people abandoning their carts halfway through checkout (because honestly, that hurts). Whatever your goal is, make sure it’s crystal clear.

And here’s a pro tip: Start with the parts of your site closest to the purchase decision. Fixing something on your checkout page will likely have a faster impact than tweaking your homepage banner.

2. Form a Hypothesis

A hypothesis is basically your test’s “why”. It’s not just random tinkering—it’s an educated guess based on data, logic, and maybe a little intuition.

A good hypothesis follows this formula:

“By changing [element] to [variation], we expect [metric] will [increase/decrease] because [reasoning].”

For example:

- “By changing our ‘Add to Cart’ button from blue to orange, we expect click-through rates will increase because orange creates stronger visual contrast.”

- “By reducing the number of checkout steps from three to two, we expect checkout completion rates will improve because it simplifies the process.”

Your hypothesis should be specific and testable. If it feels vague or overly complicated, simplify it—because clarity wins every time.

3. Create Your Test Variations

This is where creativity meets science.

Here, you’re going to create two versions of whatever you’re testing:

- Version A – the original (control)

- Version B – the new version (variation)

But here’s the golden rule: Don’t get carried away.

Testing too many changes at once is like throwing five different spices into a recipe—you won’t know which one made it taste better (or worse).

For example: If you’re testing button colors, don’t also change the font size or placement at the same time.

Keep everything else identical so you can isolate what actually made a difference.

4. Split Your Traffic Properly

Once your variations are ready, it’s time to divide and conquer—literally.

A/B testing works by splitting your audience into groups: one sees version A (the original), and the other sees version B (the new variation).

Keep a few things in mind to get an unbiased outcome:

- Visitors should be randomly assigned to ensure unbiased results.

- The same visitor should always see the same version throughout the test.

- Ensure traffic is split evenly unless running an uneven split test (e.g., 70/30).

Most A/B testing tools handle this automatically, but double-check that everything is set up correctly.
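If you’re curious how a tool can keep assignments both random and consistent, one common approach is to hash a stable visitor ID. The sketch below is only an illustration, not how any particular tool works; `assign_variant`, the visitor IDs, and the experiment name are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically map a visitor to 'A' or 'B'.

    Hashing the visitor ID together with the experiment name gives a
    stable pseudo-random number in [0, 1), so the same visitor always
    lands in the same group for a given experiment.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits -> [0, 1)
    return "B" if bucket < share_b else "A"


# Hypothetical visitor IDs, e.g. taken from a first-party cookie.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "cta-color-test")] += 1

print(counts)  # the two groups should come out close to even
```

Setting `share_b=0.3` would give the uneven 70/30 split mentioned above, with 30% of visitors seeing version B.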

5. Run Your Test for the Right Duration

What happens when you end an A/B test too early?

You miss out on the full story—how your customers behave over time, how different days of the week affect your results, and whether your changes really stick.

To avoid this, you should run your tests for at least 1-2 full business cycles. This usually means 2-4 weeks, depending on your store’s traffic patterns. It might seem like a long time, but trust me, it’s worth it.

You want to capture those daily and weekly fluctuations that can skew your results if you’re not careful. If your store sees spikes during weekends or holidays, make sure your test captures those patterns.
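To get a rough feel for how long “long enough” is for your store, you can estimate the sample size a test needs before you launch it. The sketch below uses the standard normal-approximation formula for comparing two proportions; the 5% significance level, 80% power, and the traffic numbers are assumptions you should adjust, and your testing tool may calculate this differently.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_needed(n_per_variant: int, weekly_visitors: int, variants: int = 2) -> int:
    """Full weeks required to send n_per_variant visitors to every variant."""
    return math.ceil(n_per_variant * variants / weekly_visitors)


# Hypothetical store: 2% baseline, hoping to detect a 25% relative lift.
n = sample_size_per_variant(baseline_rate=0.02, relative_lift=0.25)
print(f"Visitors per variant: {n}")
print(f"Weeks at 5,000 visitors/week: {weeks_needed(n, 5_000)}")
```

If the estimate comes out much longer than 2-4 weeks, that usually means the change you’re testing is too subtle for your traffic, not that you should stop early.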

6. Analyze Results Carefully

When your test finally concludes, don’t just glance at the numbers—dig deeper. This is where the magic happens, and you get to uncover some really cool insights.

First, check if your results are statistically significant. You need to be sure that any changes you see aren’t just due to chance. Then, look at how different segments of your audience responded.

- Did mobile users behave differently from desktop users?

- How about new visitors vs. returning ones?

These segments can give you clues about what’s working and what’s not.

Also, examine the entire conversion funnel. Just because you increased clicks on a product page doesn’t mean those clicks turned into sales. You need to see how your changes affected the whole journey.

And don’t forget to combine your data with customer feedback. Numbers tell part of the story, but hearing directly from your customers can add a whole new layer of understanding.

Lastly, act on those segment differences. What resonates with new visitors might not work for returning customers, so tailor your approach where the data supports it.
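The significance check in this step is something most tools report for you, but it helps to see what sits behind it. Here is a minimal sketch of a two-proportion z-test, one common way to compare two conversion rates; the visitor and order counts are invented, and your tool may use a different statistical method.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    # Standard error under the assumption that both versions convert equally.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical result: 200 of 10,000 visitors converted on A, 250 of 10,000 on B.
z, p = two_proportion_z_test(200, 10_000, 250, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at the 5% level" if p < 0.05 else "Not significant yet")
```

A p-value below 0.05 is a widely used threshold, not a guarantee. Note that this sketch doesn’t handle the edge case where neither version has any conversions.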

7. Document and Share Learnings

Every test—whether it’s a success or not—is a chance to learn something new about your customers.

So, create a simple documentation process that captures everything:

- Your hypothesis (what you were trying to prove)

- The test setup (what you tested and how)

- The results (with screenshots or charts)

- Key takeaways (what worked and what didn’t)

- Recommendations for next steps (what to test next)

Document everything.

This creates a knowledge base that helps you avoid repeating unsuccessful tests and builds a foundation for future experiments.

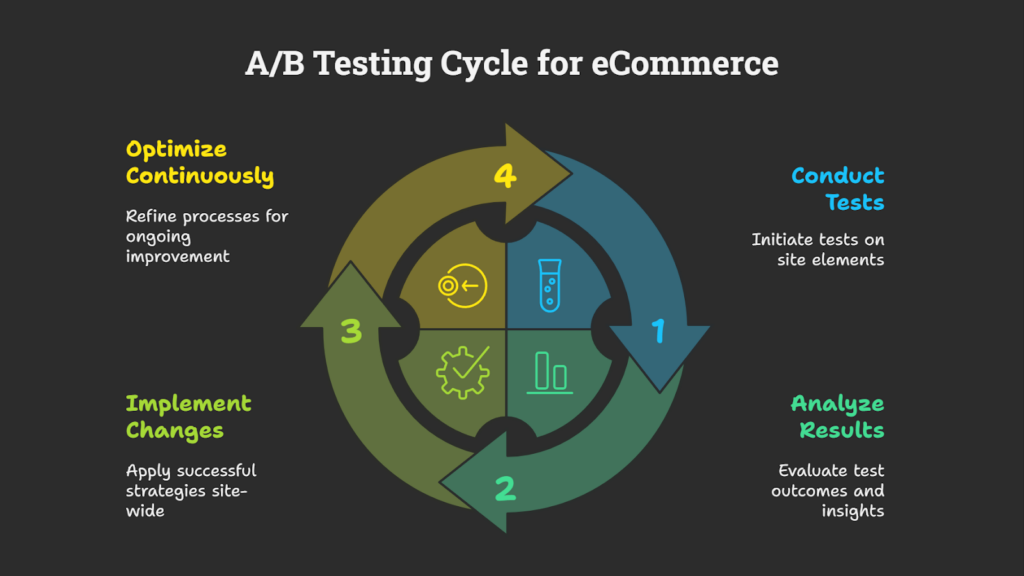

8. Implement Winners and Iterate

If your variation wins, roll it out permanently and look for ways to refine it further. If it doesn’t work out, don’t worry!

Analyze what went wrong, and use those insights to create a new hypothesis and plan your next test.

Remember, even “failed” tests give you valuable information about what doesn’t work—and that’s just as important as knowing what does.

That is the complete process for running successful A/B tests. Success, however, also depends on implementation.

So, you need to implement it correctly and avoid some common mistakes that can skew your results. We’ll discuss them in the next section.

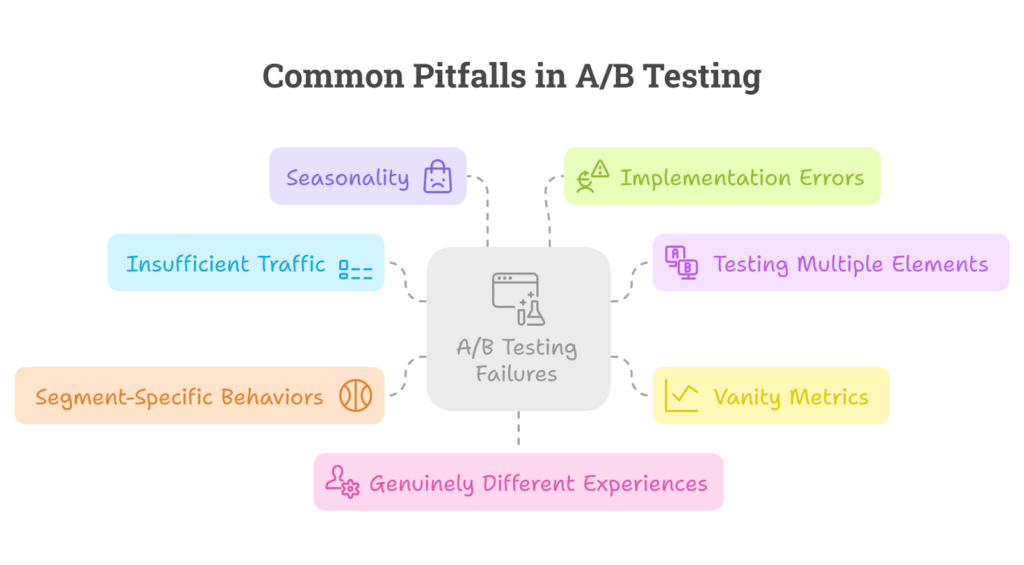

Why does A/B Testing fail?

Understanding why tests fail is the first step toward building a successful testing program.

Here are the common pitfalls that can cause your A/B tests to fail if you don’t address them:

1 — Insufficient Traffic and Premature Conclusions

Perhaps the most prevalent mistake is concluding tests before they’ve collected sufficient data.

For statistical significance, you need adequate sample sizes – something many smaller stores struggle with. When you end tests too early, you risk implementing changes based on random variations rather than genuine user preferences.

For example, if your product page only receives 100 visitors per week, you might need to run tests for several weeks or longer to gather meaningful data.

Patience is essential here. Rushing to implement changes before reaching statistical significance often leads to misguided decisions that can actually harm your conversion rates.
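To see why stopping early is risky, the following simulation runs many “A/A” tests, where both versions are identical, and compares two habits: checking for significance repeatedly and stopping at the first “win”, versus checking only once at the end. It’s a simplified sketch with made-up traffic and conversion numbers, using a basic z-test.

```python
import math
import random
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation observed yet, nothing to compare
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(runs=300, visitors=1000, peek_every=100, rate=0.05, seed=1):
    """Return (false-positive rate when peeking, false-positive rate at the end).

    Both versions convert at the same rate, so every "significant"
    result is a false alarm. visitors should be a multiple of peek_every.
    """
    rng = random.Random(seed)
    flagged_when_peeking = flagged_at_end = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        peeked_significant = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % peek_every == 0 and p_value(conv_a, n, conv_b, n) < 0.05:
                peeked_significant = True  # an impatient tester would stop here
        flagged_when_peeking += peeked_significant
        flagged_at_end += p_value(conv_a, visitors, conv_b, visitors) < 0.05
    return flagged_when_peeking / runs, flagged_at_end / runs


peeking, final_only = simulate_aa_tests()
print(f"False alarms when peeking every 100 visitors: {peeking:.1%}")
print(f"False alarms when checking once at the end:   {final_only:.1%}")
```

Because the final check is also one of the peeks, the peeking rate can never be lower than the end-only rate, and in practice it tends to be noticeably higher.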

2 — Testing Too Many Elements Simultaneously

The “A/B” in A/B testing means comparing just two versions – yet many merchants fall into the trap of testing multiple elements at once.

When you change the button color, headline, and product image all at once, you can’t isolate which specific change influenced customer behavior. Testing every combination separately (a multivariate test) would isolate each effect, but the number of combinations, and the traffic required, grows exponentially with each added element.

So, you should start with simple, focused tests that examine one element at a time to clearly understand what drives your conversions.
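A quick calculation shows how fast the traffic requirement grows when every combination of elements becomes its own variant. The per-variant sample size and weekly traffic below are hypothetical placeholders.

```python
from math import prod


def variant_count(options_per_element: dict[str, int]) -> int:
    """Number of distinct page versions when every combination is tested."""
    return prod(options_per_element.values())


def weeks_to_fill(options_per_element: dict[str, int],
                  visitors_per_variant: int, weekly_visitors: int) -> int:
    """Full weeks needed to give every version its share of visitors."""
    total = variant_count(options_per_element) * visitors_per_variant
    return -(-total // weekly_visitors)  # ceiling division


simple_test = {"button_color": 2}
multivariate_test = {"button_color": 2, "headline": 2, "product_image": 2}

for name, test in [("A/B", simple_test), ("Multivariate", multivariate_test)]:
    versions = variant_count(test)
    weeks = weeks_to_fill(test, visitors_per_variant=5_000, weekly_visitors=10_000)
    print(f"{name}: {versions} versions, about {weeks} week(s)")
```

Each extra two-option element doubles the number of versions, which is why focused tests are the practical choice for most stores.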

3 — Focusing on Vanity Metrics

Too often, merchants celebrate improvements in metrics like page views or time-on-site without confirming whether these translate to actual revenue growth. Not all eCommerce metrics carry equal weight in determining business success.

A redesigned homepage might keep visitors browsing longer, but if that doesn’t lead to more purchases, is it truly successful?

Thus, always tie your testing objectives to concrete business outcomes like conversion rate, average order value, or revenue per visitor.
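These three metrics are simple to compute from raw order data, and looking at them side by side can change which version you call the winner. The numbers in this sketch are invented to show a variation that converts better but earns less per visitor.

```python
def summarize(visitors: int, order_values: list[float]) -> dict[str, float]:
    """Conversion rate, average order value, and revenue per visitor."""
    orders = len(order_values)
    revenue = sum(order_values)
    return {
        "conversion_rate": orders / visitors,
        "average_order_value": revenue / orders if orders else 0.0,
        "revenue_per_visitor": revenue / visitors,
    }


# Hypothetical data: each list holds the value of every order placed.
control = summarize(visitors=1_000, order_values=[40.0, 60.0, 50.0, 50.0])
variation = summarize(visitors=1_000, order_values=[20.0] * 5)

for name, stats in [("Control", control), ("Variation", variation)]:
    print(
        f"{name}: CR {stats['conversion_rate']:.2%}, "
        f"AOV ${stats['average_order_value']:.2f}, "
        f"RPV ${stats['revenue_per_visitor']:.2f}"
    )
```

Here the variation wins on conversion rate but loses on revenue per visitor, so judging it on conversions alone would be misleading.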

4 — Ignoring Segment-Specific Behaviors

Treating all visitors as a homogeneous group overlooks critical insights.

- Your mobile users may respond differently to changes than desktop users.

- First-time visitors might prefer different layouts than returning customers.

- International shoppers might have different preferences from domestic ones.

When you analyze test results only in aggregate, you might miss that a change works wonderfully for one segment but actually harms conversions for another.

So, segment your test analysis to uncover these nuanced patterns.
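As a small illustration of how an aggregate result can hide a losing segment, the sketch below breaks the same test down by device. The rows are made-up numbers in the form (segment, variant, visitors, conversions); in practice you would export them from your analytics or testing tool.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """Conversion rate per (segment, variant), plus an 'all' aggregate."""
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for segment, variant, visitors, conversions in rows:
        for key in ((segment, variant), ("all", variant)):
            totals[key][0] += visitors
            totals[key][1] += conversions
    return {key: conv / vis for key, (vis, conv) in totals.items()}


# Hypothetical test results.
rows = [
    ("mobile", "A", 6_000, 120),
    ("mobile", "B", 6_000, 168),
    ("desktop", "A", 4_000, 160),
    ("desktop", "B", 4_000, 132),
]

rates = conversion_by_segment(rows)
for segment in ("all", "mobile", "desktop"):
    a, b = rates[(segment, "A")], rates[(segment, "B")]
    print(f"{segment:>8}: A {a:.2%} vs B {b:.2%}")
```

In this example, version B looks slightly better overall and clearly better on mobile, yet it performs worse on desktop. Keep in mind that each segment has fewer visitors than the whole test, so segment-level differences need their own significance check.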

5 — The Seasonality Trap

eCommerce is inherently cyclical.

Consumer behavior changes dramatically during holiday seasons, promotional periods, or even different days of the week. Testing during Black Friday sale week will produce very different results than testing during a typical week in February.

Many tests fail because they don’t account for these temporal factors. For reliable results, run tests during representative time periods or ensure your testing window captures a complete business cycle.

6 — Implementation Errors

Even the best-designed tests can fail due to technical execution problems. Common issues include:

- Inconsistent implementation across devices or browsers

- Slow-loading variations that bias results

- Tracking code errors that miss conversion events

- Testing tools conflicting with other site functionality

Always conduct quality assurance testing before launching experiments to ensure your variations display properly and that data collection is functioning correctly.

7 — Failure to Create Genuinely Different Experiences

Subtle changes rarely produce meaningful insights.

For example, testing a slightly different shade of blue on your “Add to Cart” button might be easy to implement, but it’s unlikely to significantly impact customer behavior.

The most valuable tests often involve more substantial changes to customer experience, such as:

- Completely different checkout flows

- Radically simplified navigation systems

- New product page layouts that prioritize different information

Don’t limit yourself to cosmetic changes – be willing to test bold alternatives that could lead to breakthrough improvements.

In a nutshell, failure itself is valuable – learning what doesn’t work is just as important as discovering what does. Even “failed” tests contribute to your growing understanding of customer preferences and behaviors.

Next, we’ll look at popular tools you can use to conduct A/B testing.

What are the Best A/B Testing Tools for eCommerce?

Finding the right A/B testing tool can dramatically simplify your website optimization efforts. Let’s explore some of the most effective solutions available to eCommerce merchants of all sizes.

Here are the best tools for A/B Testing in eCommerce:

- Optimizely

- VWO (Visual Website Optimizer)

- AB Tasty

- Crazy Egg

- Kameleoon

1. Optimizely

A powerhouse in the enterprise testing space, Optimizely offers robust testing capabilities including multivariate testing, personalization features, and advanced targeting options. Their statistics engine provides quick, reliable results, and the platform scales well for large stores with significant traffic.

Best for: Larger eCommerce operations needing sophisticated experimentation across multiple pages and user segments.

2. VWO (Visual Website Optimizer)

VWO combines a user-friendly WYSIWYG editor with powerful segmentation capabilities. Their platform includes heatmaps, session recordings, and form analytics alongside traditional A/B testing. The all-in-one approach makes it particularly valuable for merchants who want deep insights into visitor behavior.

Best for: Mid-size to large merchants seeking comprehensive testing with robust analytics and visitor behavior tracking.

3. AB Tasty

This European-born platform emphasizes accessibility and ease of use while maintaining enterprise-level capabilities. It includes AI-powered recommendations and personalization tools along with standard testing features. Their approach to customer success includes strategic guidance, not just technical support.

Best for: Growing eCommerce companies that want strategic support alongside testing tools.

4. Crazy Egg

While known primarily for its heatmaps and user behavior analytics, Crazy Egg has evolved into a capable A/B testing platform, particularly valuable for eCommerce. Their snapshot tool gives you visual insights into how visitors interact with your pages before you even begin testing. The platform combines user behavior tracking with testing capabilities, making it easier to identify testing opportunities.

Best for: Small to mid-size eCommerce merchants who want to combine intuitive user behavior analysis with straightforward A/B testing capabilities.

5. Kameleoon

A rising star in the testing world, Kameleoon offers a full-featured experimentation platform with AI-driven personalization. Their real-time engine allows for immediate adaptation to user behavior, which can be particularly valuable for eCommerce sites with rapidly changing inventory or promotions.

Best for: Tech-forward merchants interested in combining testing with advanced personalization.

You can find more A/B testing tools online, but these are among the most popular.

Also, many eCommerce platforms offer native testing capabilities, so explore the options available for your platform.

The most powerful testing tool is the one you’ll actually use consistently. A simple solution that becomes part of your routine will outperform an advanced platform that sits idle after the initial excitement fades.

Final Thoughts!

As we’ve explored throughout this guide, A/B testing isn’t just a technical exercise; it’s the compass that guides your eCommerce store toward continuous improvement.

Even when you’ve checked all the fundamental boxes, there’s always room to optimize how each element works together to create a seamless buying experience. The difference between a struggling store and a thriving one often comes down to this willingness to test, learn, and adapt.

While this guide provides the framework for implementing effective A/B tests, we understand that executing a comprehensive eCommerce testing program requires time, expertise, and resources that many merchants simply don’t have available in-house.

That’s precisely why we’ve developed our Conversion Rate Optimization (CRO) service that includes CRO-focused store design and A/B testing.

We don’t just run tests. We partner with you to implement a sustainable optimization program that continues delivering results long after our initial engagement. So, if you’re looking for a reliable partner, we’re here to help.
